Update!

We recommend using the new custom attestations instead of generic attestations. Please see these two new blog posts:

- Migrating from Generic to Custom Attestations: A zero-trust approach to compliance

- Moving to a zero-trust model with Kosli’s custom attestations

All but one of the kosli attest commands calculate the true/false compliance value for you based on their type. For example, kosli attest snyk can read the sarif output file produced by a snyk scan.

The one that doesn’t is kosli attest generic which is “type-less”. It can attest anything, but Kosli cannot calculate a true/false compliance value for you. Often the tool you are using can generate the true/false value, which is then easy to capture.
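When a tool already signals pass/fail through its exit status, capturing that as a true/false value takes only a line or two. A minimal Ruby sketch, assuming a hypothetical helper named `compliant?` and using a Ruby one-liner as a stand-in for the real tool:

```ruby
require 'rbconfig'

# Run a command and reduce its exit status to true/false.
# Kernel#system returns true for a zero exit status, false for a
# non-zero one, and nil when the command could not be started.
# (compliant? is a hypothetical name, not part of any Kosli tooling.)
def compliant?(*command)
  system(*command) == true
end

# Stand-ins for a real tool: Ruby one-liners that exit 0 or 1.
passing = [RbConfig.ruby, '-e', 'exit 0']
failing = [RbConfig.ruby, '-e', 'exit 1']

puts compliant?(*passing) # true
puts compliant?(*failing) # false
```

Treating "could not run" the same as "failed" is a deliberate choice here: a check that never ran should not be reported as compliant.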

In this post we’ll look at the typical steps involved in making a generic attestation when you have to calculate the true/false compliance value yourself. The example we’ll use is from cyber-dojo’s differ microservice (written in Ruby) which gathers coverage metrics as part of its unit test run.

- There are two coverage metrics, total and missed, which we gather for both the number of lines and the number of branches.

- We’ll define some compliance threshold rules for these metrics.

- We’ll write a script to compare the metrics gathered against these thresholds.

- We’ll add steps to our CI workflow to run the script, and report its result to Kosli using the kosli attest generic CLI command.

- Finally, we’ll consider situations when there might be preferable alternatives to kosli attest generic.

Gather your data

As is often the case, gathering the new data is relatively simple. In our example we need only add an extra reporter to the coverage library…

require 'simplecov'
require_relative 'simplecov_formatter_json' # <<<<

SimpleCov.start do
  ...
  test_re = %r{^/differ/test}
  add_group('code') { |src| src.filename !~ test_re }
end

formatters = [
  SimpleCov::Formatter::HTMLFormatter,
  SimpleCov::Formatter::JSONFormatter # <<<<
]
SimpleCov.formatters = SimpleCov::Formatter::MultiFormatter.new(formatters)

…and implement the JSONFormatter to save the required metrics data.

For example:

require 'simplecov'
require 'json'

module SimpleCov
  module Formatter
    class JSONFormatter
      def format(result)
        data = { ... }
        result.groups.each do |name, file_list|
          data[name] = {
            lines: {
              total: file_list.lines_of_code,
              missed: file_list.missed_lines
            },
            branches: {
              total: file_list.total_branches,
              missed: file_list.missed_branches
            }
          }
        end
        File.open(output_filepath, 'w+') do |file|
          file.print(JSON.pretty_generate(data))
        end
        data.to_json
      end
      ...

When we run our unit tests, this reporter generates a JSON file called coverage_metrics.json containing the required metrics values:

{ "code": {
    "lines": {
      "total": 352,
      "missed": 0
    },
    "branches": {
      "total": 60,
      "missed": 1
    }
  },
  ...
}
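The total and missed counts are enough to derive the more familiar coverage percentages if you want them. A small sketch, assuming the sample values shown above and a hypothetical `percent_covered` helper:

```ruby
require 'json'

# Sample values copied from the coverage_metrics.json excerpt.
metrics = JSON.parse(<<~JSON)
  { "code": { "lines":    { "total": 352, "missed": 0 },
              "branches": { "total": 60,  "missed": 1 } } }
JSON

# Percentage covered, given a { "total" => n, "missed" => m } pair.
# (percent_covered is a hypothetical helper for illustration.)
def percent_covered(counts)
  total = counts['total']
  return 100.0 if total.zero? # assumption: nothing to cover counts as fully covered

  ((total - counts['missed']) * 100.0 / total).round(2)
end

metrics['code'].each do |kind, counts|
  puts "#{kind}: #{percent_covered(counts)}%"
end
# lines: 100.0%
# branches: 98.33%
```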

Define your compliance threshold rules

There are many ways to do this too. We’ve chosen to write them in a Ruby source file.

For example:

def metrics
  [
    # limit the size of our microservice
    [ 'code.lines.total'    , '<=', 500 ],
    # enforce full statement coverage
    [ 'code.lines.missed'   , '<=', 0   ],
    # limit the complexity of our microservice
    [ 'code.branches.total' , '<=', 75  ],
    # enforce (almost) full branch coverage
    [ 'code.branches.missed', '<=', 1   ],
  ]
end
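Each rule pairs a dotted path into the JSON data with a comparison operator and a limit. One way to evaluate a single rule is sketched here, with hypothetical helper names (`value_at`, `rule_holds?`) rather than the code differ actually uses:

```ruby
require 'json'

# Sample data shaped like coverage_metrics.json.
coverage = JSON.parse(
  '{"code":{"lines":{"total":352,"missed":0},"branches":{"total":60,"missed":1}}}'
)

# Look up a dotted path such as 'code.branches.missed' in nested hashes.
def value_at(data, dotted_path)
  data.dig(*dotted_path.split('.'))
end

# Evaluate one [path, operator, limit] rule by sending the operator
# (for example '<=') to the actual value with the limit as its argument.
def rule_holds?(data, rule)
  path, operator, limit = rule
  value_at(data, path).public_send(operator, limit)
end

puts value_at(coverage, 'code.branches.missed')               # 1
puts rule_holds?(coverage, ['code.branches.missed', '<=', 1]) # true
puts rule_holds?(coverage, ['code.lines.total', '<=', 300])   # false
```

Keeping the operator as data means a rule such as `'>='` on a future metric needs no new code, only a new row.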

Process the data

Again, there are many ways we could process the gathered data against the compliance threshold rules. We’ve chosen to write:

- a Ruby script to process the JSON values produced by a unit-test run against these threshold rules (see example).

- a bash script to execute this Ruby script in a docker container with appropriate volume mounts. The bash script produces a zero $? exit status when all threshold rules are true, and non-zero when any are false (see example).

- a makefile target that calls the bash script (see example).
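The core of such a script is small. The following is a sketch under stated assumptions, not the script from the differ repository: `check` and `exit_status` are hypothetical names, and an inline sample stands in for reading the real coverage_metrics.json.

```ruby
require 'json'

# Threshold rules in the [path, operator, limit] shape defined earlier.
RULES = [
  ['code.lines.total',     '<=', 500],
  ['code.lines.missed',    '<=', 0],
  ['code.branches.total',  '<=', 75],
  ['code.branches.missed', '<=', 1]
].freeze

# Returns one result hash per rule, recording the actual value and outcome.
# A metric that is missing from the data counts as a failed rule.
def check(metrics, rules)
  rules.map do |path, operator, limit|
    actual = metrics.dig(*path.split('.'))
    { path: path, operator: operator, limit: limit, actual: actual,
      ok: !actual.nil? && actual.public_send(operator, limit) }
  end
end

# Zero when every rule holds, non-zero otherwise.
def exit_status(results)
  results.all? { |r| r[:ok] } ? 0 : 1
end

# Inline sample standing in for JSON.parse(File.read(...)) on the real file.
metrics = JSON.parse(<<~JSON)
  { "code": { "lines":    { "total": 352, "missed": 0 },
              "branches": { "total": 60,  "missed": 1 } } }
JSON

results = check(metrics, RULES)
results.each do |r|
  verdict = r[:ok] ? 'PASS' : 'FAIL'
  puts format('%-22s %s %s %s  %s', r[:path], r[:actual], r[:operator], r[:limit], verdict)
end
puts "exit status would be #{exit_status(results)}"
```

A real script would finish with `exit(exit_status(results))`, which is the value the bash wrapper and the CI step then see as `$?`.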

Attest the result in your CI workflow

To instrument our CI workflow we:

- Add a step to call the makefile target

- Add a step to install the Kosli CLI

- Add a step calling kosli attest generic with the result of the first step.

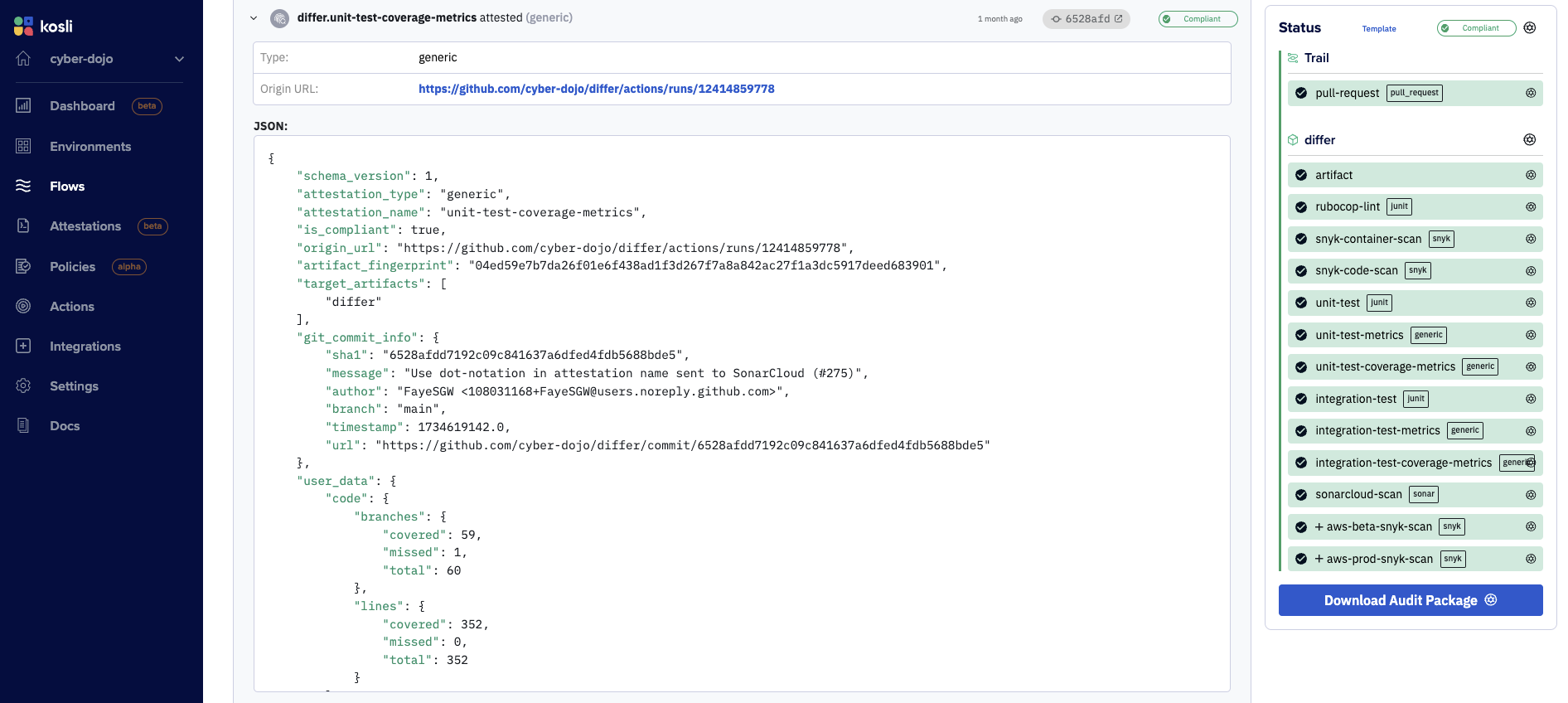

We’ll also use the --user-data flag to send the JSON file containing the metrics values. See the resulting attestation in a Kosli Trail.

on:
  ...

env:
  KOSLI_FLOW: ...
  KOSLI_TRAIL: ...
  ...

jobs:
  ...
  unit-tests:
    runs-on: ubuntu-latest
    needs: [build-image]
    env:
      KOSLI_FINGERPRINT: ${{ needs.build-image.outputs.artifact_digest }}
    steps:
      ...
      - name: Run unit tests
        run:
          make unit_test

      - name: Check coverage metrics
        id: coverage_metrics
        run:
          make unit_test_coverage_metrics

      - name: Setup Kosli CLI
        if: ${{ github.ref == 'refs/heads/main' && (success() || failure()) }}
        uses: kosli-dev/setup-cli-action@v2
        with:
          version: ${{ vars.KOSLI_CLI_VERSION }}

      - name: Attest coverage metrics to Kosli
        if: ${{ github.ref == 'refs/heads/main' && (success() || failure()) }}
        run: |
          # The step outcome is 'success' only when every threshold rule held
          KOSLI_COMPLIANT=$([ "${{ steps.coverage_metrics.outcome }}" == 'success' ] && echo true || echo false)
          # Pass the value explicitly: an unexported shell variable is not visible to the CLI
          kosli attest generic \
            --name=differ.unit-test-coverage-metrics \
            --compliant="${KOSLI_COMPLIANT}" \
            --user-data="./reports/server/coverage_metrics.json"

The name of our generic attestation is differ.unit-test-coverage-metrics which we add to our Flow template file. For example:

version: 1
trail:
  ...
  artifacts:
    - name: differ
      attestations:
        ...
        - name: unit-test-coverage-metrics
          type: generic
        ...

Each kosli attest generic command adds its compliance evidence to a Kosli Trail.

Summary

The kosli attest generic command is “type-less”. It can attest anything, but Kosli cannot calculate a true/false compliance value for you.

Often the tool you are using will output a zero/non-zero exit status, and you can easily capture the result. If not, typical steps are:

- Gather the data you are interested in.

- Define compliance threshold rules for this data.

- Compare the data gathered against these thresholds to produce a true/false compliance result.

- Report this result to Kosli using the kosli attest generic CLI command.

Note: sometimes you can use the kosli attest junit command instead. For example, the rubocop Ruby linter has a --format=junit flag to produce its lint output in JUnit XML format (see example). You can then report Rubocop lint evidence to Kosli using kosli attest junit (see example).